Written by Johann Dettweiler, Chief Information Security Officer, stackArmor

Utilizing a “Risk Score” to Inform Risk-based Authorization of FedRAMP Systems

That was a mouthful…a lot of words to discuss what is a really interesting topic, and in my opinion, a bit of a “white rabbit” in the compliance and IT security world.

With all of the shakeups happening in the Federal world right now, it seems that FedRAMP is very interested in streamlining and re-designing their authorization process. In January of 2025 they released a blog describing a renewed focus on “delivery”, and prior to that released a number of blogs that focused on “streamlining” and making the overall FedRAMP authorization process more “agile”. More recently, the launch of FedRAMP 20X explicitly talks about generating ideas on how we move away from point-in-time, paper-based compliance to continuous compliance.

An idea being tossed around is the use of a “Risk Score” for systems that can be used to inform risk-based authorization decisions and, potentially, accelerate the system authorization process. This idea is neat, but as with all things “IT security”, there are caveats…and pitfalls. In this post we propose the stackArmor Cyber Maturity Score (TM) that combines traditional controls risk with threats to provide an actionable risk assessment tool.

Leveraging the Past

The use of a “Risk Score” isn’t novel. In late 2023, StateRAMP introduced their StateRAMP Security Snapshot Criteria and Scoring process. The StateRAMP Standards and Technical Committee updated the criteria and scoring to align with NIST 800-53 Rev. 5 and the MITRE ATT&CK framework. The new criteria prioritize the highest-scoring MITRE ATT&CK threat controls, emphasizing best practices for improved security defense.

This prioritization and scoring was based on work that FedRAMP introduced in their Threat-Based Risk Profiling Methodology White Paper. In this white paper, they outline a process by which a Control Protection Value (CPV) is derived for each control utilizing specialized classification, scores, and weights.

In 2022, FedRAMP and MITRE published the results of this scoring in a public-facing GitHub Repo. The Threat Analysis includes:

- Control Protection Values (CPV) – provides a listing of all security controls scored along with each control’s protection value.

- Control Rationale – provides the rationale, conditions, dependencies, and assumptions leveraged in the scoring of each control.

- Raw Scores – provides the protect, detect, and respond scores for each security control across all techniques of the MITRE ATT&CK Framework.

- Heat Map – provides the heatmap value for each technique in the MITRE ATT&CK Framework.

The white paper goes on to discuss utilizing a Security Control Assessment to establish an “Implementation Value” (IV) for each of the controls based on a determination of whether the control is “satisfied” or “other than satisfied”.

The white paper concludes with the idea of creating system “Risk Profiles” based on the security capabilities listed in NISTIR 8011 and utilizing the implementation values for each control to determine an overall maturity level for each capability. Generally, this makes sense, but I think there may be a better way to approach this.

Let’s Use What We Got!

The creation of the CPVs really was a brilliant idea posed by FedRAMP. I think we should dust these off, update them based on the latest MITRE ATT&CK framework, and put these ideas to work to help us protect sensitive data.

However, instead of creating a maturity score based on the NISTIR 8011 security capabilities, why couldn’t the IVs of the control baseline drive the overall score of the system? These scores could then leverage the MITRE ATT&CK NIST 800-53 Rev. 5 control mappings to drive risk-based decisions about the system. Let’s dive into an example of what could be done.

In this example, we’ll use a baseline that includes the following 8 NIST 800-53 security controls (Note: my professional security advice would be – 8 controls probably aren’t enough to secure your data):

CM-6, AU-6, IA-2, SI-3, CM-7, SI-7, AC-2, and AC-6

The CPVs of the following controls are:

For this example, we want to establish a Perfect Score for the “baseline” of controls that we’ve selected.

Perfect Score = Sum of all protection values

So, a perfect score for this baseline is 1153.72.

In order to determine how well we’ve implemented our terribly insufficient control baseline, we need to determine the Implementation Value (IV) of our “system”. I’m going to fudge some numbers here for the sake of demonstration. Let’s assume our favorite 3PAO (you know who you are…wink, wink) has performed a deeply thorough examination of our control implementation and presented us with the following IVs for the “baseline”:

We now need to determine the Implemented CPV for each of the controls based on the 3PAO assessment.

To do so, we multiply the CPV of each control by the Implementation %:

The actual Implementation Score then becomes:

Implementation Score = Sum of all Implemented CPVs

So, the implementation score for this “system” is 945.76. And, because people love easily understood, simple numbers, we perform:

(Implementation Score/Perfect Score) * 100

To arrive at the revolutionary and utterly ground-breaking stackArmor CYBER MATURITY SCORE (TM)

81.97
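The arithmetic above can be sketched in a few lines of Python. This is a minimal illustration rather than an actual stackArmor implementation: the function names are invented for this post, and the per-control values in the second half are placeholders, not the CPVs published by FedRAMP and MITRE. Only the two totals (945.76 and 1153.72) come from the example above.

```python
def implemented_cpvs(cpvs, implementation_pct):
    """Multiply each control's CPV by its implementation percentage (0.0 to 1.0)."""
    return {control: cpvs[control] * implementation_pct[control] for control in cpvs}


def maturity_score(implementation_score, perfect_score):
    """(Implementation Score / Perfect Score) * 100, rounded to two decimals."""
    if perfect_score <= 0:
        raise ValueError("perfect_score must be positive")
    return round(implementation_score / perfect_score * 100, 2)


# Totals taken from the worked example in this post.
example_score = maturity_score(945.76, 1153.72)

# Placeholder per-control values, for illustration only (NOT the published CPVs).
placeholder_cpvs = {"AC-2": 100.0, "AC-6": 50.0}
placeholder_pct = {"AC-2": 0.5, "AC-6": 1.0}
placeholder_implemented = implemented_cpvs(placeholder_cpvs, placeholder_pct)
placeholder_score = maturity_score(
    sum(placeholder_implemented.values()), sum(placeholder_cpvs.values())
)

print(example_score, placeholder_score)
```

Keeping the per-control multiplication separate from the final ratio means the Implemented CPVs stay available for other uses, such as a heat map, instead of being collapsed straight into one number.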

Disappointed? But Wait, There’s More

Admittedly, that number, 81.97, by itself is stupid and meaningless. But if that number can be combined with the MITRE ATT&CK framework and Continuous Authorization to Operate (cATO) it starts to become far more meaningful.

Let’s start by thinking about cATO, and how that stackArmor CYBER MATURITY SCORE can help to provide dynamic system state tracking on an ongoing basis. In cATO, evidence is provided on an ongoing basis for a subset of controls. We’ll use the following as an example:

cATO Requirement – AC-2 – System must perform a quarterly review of user access and address deviations in accordance with account management processes.

Through the cATO processes, it is determined that the system has failed to review the accounts for a quarter. This impacts the system’s AC-2 Implemented CPV:

This change to the AC-2 Implemented CPV impacts the overall stackArmor CYBER MATURITY SCORE (TM):

78.59

This immediate change to a singular score will prompt appropriate review and require explanation by the system. Of course, the expectation would be to create an appropriate Plan of Action and Milestones (POA&M) entry to address the finding. Upon fixing the issue, let’s assume the following:

The system has finally started getting it together. The POA&M item for AC-2 quarterly access reviews has been addressed and validated. In addition, they’ve managed to implement a number of other fixes to address other POA&M items that have had major impacts on the Implemented CPV for the system.

Through cATO, the score is dynamically updated based on the system’s ability to address POA&M issues and increase their overall Implemented CPV. The stackArmor CYBER MATURITY SCORE now sits at:

90.15

Through the use of a single dynamically updated “score”, an Authorizing Official can easily see a system’s status and have an instant understanding of incremental changes to the system risk level.
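One way to picture this kind of dynamic tracking is a small object that recomputes the score every time cATO evidence changes a control’s implementation percentage. The sketch below is hypothetical: the `MaturityTracker` class, its method names, and every number in the usage example are assumptions made for illustration, and they will not reproduce the 78.59 or 90.15 figures above.

```python
class MaturityTracker:
    """Recomputes the score whenever a control's implementation percentage changes."""

    def __init__(self, cpvs, implementation_pct):
        self.cpvs = dict(cpvs)
        self.pct = dict(implementation_pct)
        self.history = [self.score()]  # score after each change, oldest first

    def score(self):
        perfect = sum(self.cpvs.values())
        implemented = sum(self.cpvs[c] * self.pct[c] for c in self.cpvs)
        return round(implemented / perfect * 100, 2)

    def update(self, control, new_pct):
        """Record new evidence for one control and return the change in score."""
        if control not in self.cpvs:
            raise KeyError(control)
        self.pct[control] = new_pct
        new_score = self.score()
        delta = round(new_score - self.history[-1], 2)
        self.history.append(new_score)
        return delta


# Placeholder values, for illustration only (NOT the published CPVs).
tracker = MaturityTracker(
    cpvs={"AC-2": 200.0, "AU-6": 100.0, "SI-3": 100.0},
    implementation_pct={"AC-2": 1.0, "AU-6": 0.5, "SI-3": 0.5},
)
missed_review = tracker.update("AC-2", 0.5)  # quarterly access review missed
remediated = tracker.update("AC-2", 1.0)     # POA&M item closed and validated
print(tracker.history, missed_review, remediated)
```

The returned delta is the piece an Authorizing Official would likely care about most, since it shows how far a single finding moved the score.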

A Number is Just a Number

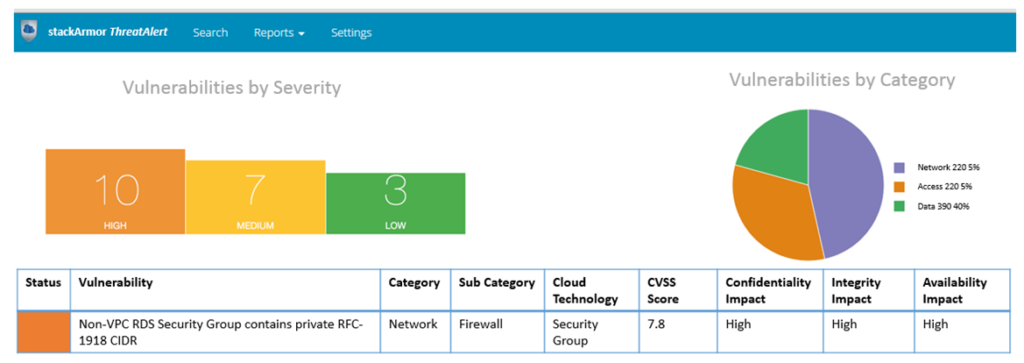

At the end of the day, it’s neat, but it’s still just a number. In addition, a system’s POA&M may have items related to control implementation deficiencies, but how does that account for vulnerability scan findings that are commonly enumerated utilizing Common Vulnerabilities and Exposures (CVEs) and the Common Vulnerability Scoring System (CVSS)?

Utilizing the Implemented CPV values can have a major impact on understanding how a CVE and its associated CVSS score actually impacts a system. Let’s leverage the MITRE ATT&CK Known Exploited Vulnerabilities Mapping to get a better understanding of some real-world risk.

Vulnerability Scanning of the System has been performed and CVE-2021-21975: VMware Server Side Request Forgery in vRealize Operations Manager API has been detected on the system. This is identified as a Known Exploited Vulnerability (KEV) and is associated with ATT&CK Technique T1190: Exploit Public-Facing Application.

To make matters so much worse, the CVE has a CVSS score of 7.5 and is Network exploitable with a Low Attack Complexity.

As the AO, you consult your ATT&CK Technique heat map, which has been constructed based on your current understanding of the Implemented CPV values.

From this heat map you understand that there is a pretty significant risk to your system. Your understanding of Implemented CPVs shows that AC-2, AU-6 and SI-3 may contribute to a potential threat vector being able to exploit this vulnerability on your system.

More than just understanding the CVSS score of the vulnerability, you also understand how that score impacts your system based on the control implementation. Given this, you can now prioritize this risk and dedicate appropriate resources to properly resolving it.
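The lookup described above, from CVE to ATT&CK technique to the controls that matter, could be sketched roughly as follows. Treat all of it as an assumption: the two dictionaries are hand-written stand-ins for the MITRE KEV mapping and the ATT&CK to NIST 800-53 control mapping, the control list for T1190 simply reuses the three controls named in this example, and the implementation percentages and the 0.8 threshold are invented.

```python
# Hypothetical stand-ins for the real MITRE mappings.
CVE_TO_TECHNIQUES = {"CVE-2021-21975": ["T1190"]}            # association stated in this post
TECHNIQUE_TO_CONTROLS = {"T1190": ["AC-2", "AU-6", "SI-3"]}  # assumed from this example


def weak_controls(cve, implementation_pct, threshold=0.8):
    """Return (control, pct) pairs tied to the CVE's techniques that fall
    below the threshold, weakest first."""
    controls = set()
    for technique in CVE_TO_TECHNIQUES.get(cve, []):
        controls.update(TECHNIQUE_TO_CONTROLS.get(technique, []))
    flagged = [
        (control, implementation_pct[control])
        for control in controls
        if control in implementation_pct and implementation_pct[control] < threshold
    ]
    return sorted(flagged, key=lambda item: (item[1], item[0]))


# Placeholder implementation percentages, for illustration only.
system_pct = {"AC-2": 0.5, "AU-6": 0.7, "SI-3": 0.9, "CM-6": 0.4}
flagged = weak_controls("CVE-2021-21975", system_pct)
print(flagged)
```

In this made-up case CM-6 is the weakest control overall, but it is not flagged because it is not tied to the technique in question. That is the point of the exercise: prioritization follows the attack path, not the raw list of deficiencies.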

But Why Can’t I Just Use the Numbers on my POA&M

I have an anecdotal story about a federal agency that based its risk decisions on the number of POA&M Items their systems had. They would literally order the systems from most-to-least risk based on the count of the number of POA&M items. I was heavily involved with a particular system where we went above and beyond to do our due diligence to accurately report findings and ensure that we utilized the POA&M as a proper way to track finding remediation. Of course, this resulted in a lot of POA&M items.

There was another system team that wasn’t quite so diligent in their application of security controls or tracking of findings. In fact, they carried a single POA&M item for RA-5. The POA&M item stated that the system did not perform vulnerability scanning, like at all, it simply did not scan any of the hosts within its authorization boundary. In a monthly risk review, one of the agency’s high-level security managers actually said out loud that they wanted more systems to be like this system that only carried a single POA&M, and that it was all about “reducing the number of POA&M items”.

I wish I could say this was the only time I’ve seen this, but the use of the number of POA&M items a system carries to somehow inform decision makers of the risk-level of a system runs rampant in the security world.

The number of POA&M items a system has should never be considered an indicator of system risk. Full stop…period.

In fact, I would argue that, in some cases, a system that carries more POA&M items is actually more secure and carrying less risk than a system where findings are obfuscated through poor capabilities around control implementation, compliance, process and continuous monitoring. Ultimately, a POA&M is a process. It’s one way to assign resources and implement impactful changes to a system. By itself, it is not a good indicator of system risk.

However, if you take the example above where the CVE of a finding is evaluated against the actual implementation of controls to address the vectors by which an attacker might exploit a system weakness using a system’s stackArmor CYBER MATURITY SCORE, decision makers are empowered with the tools needed to make critical, risk-based decisions. With a deep understanding of the strengths and weaknesses of a system at the control implementation level, POA&M items can be prioritized and categorized based on the real-world risk they present to a system. This type of risk-informed decision making is critical in today’s ever evolving security threat landscape.

Roadmap – What Needs to be Done

I think creating a “Risk Score” for FedRAMP that can be used by agencies to quickly understand and evaluate the true risk of a system is a logical and achievable goal. FedRAMP already laid a large portion of the groundwork back in 2022 through their work with MITRE to create CPVs. Here are some steps to take to resurrect the idea of a Threat-Based System profile:

- Update the CPVs based on the most recent MITRE ATT&CK framework (v16.1) and re-create the breakdown of the scoring at the control requirement level. While I was able to find the overall control CPV values in MITRE’s GitHub repo, the detailed breakdown of the scoring used to achieve those values seems to have been lost to time.

- Refresh the MITRE NIST 800-53 control mapping to include the latest version of the ATT&CK framework and accommodate all of the latest ATT&CK techniques.

- Expand the MITRE KEV mappings to more than just KEVs. In 2021, MITRE defined a methodology for using MITRE ATT&CK to characterize the impact of a vulnerability as described in the CVE list. There is an archived GitHub repo that describes the process and methodology.

- Establish a body that is responsible for utilizing the CVE to ATT&CK mapping methodology to publish mappings for CVEs to be utilized by system personnel to better understand how system findings can be evaluated using the stackArmor CYBER MATURITY SCORE to make more informed, risk-based decisions when creating POA&M items.

- Establish a process by which systems can make their stackArmor CYBER MATURITY SCORE known to agencies and create thresholds and processes by which the score can be continuously monitored throughout the system’s lifecycle.

Obviously, there’s a lot more that needs to be done, but getting a working model in place and operating, leveraging the groundwork that has already been laid, is something that could happen in a matter of months, not years.

Conclusion

The creation and utilization of a “Risk Score” to allow agencies to make better, more risk-informed decisions when leveraging FedRAMP systems isn’t a silver bullet. The “garbage in, garbage out” problem, at the root of so many things cyber security, will still be present. However, given the level of quality work that has already been dedicated to creating a Threat-based way of understanding a system’s risk, I believe with a little updating and refinement, creating a stackArmor CYBER MATURITY SCORE to normalize the understanding of system risk is a worthwhile effort. This relatively simple milestone could provide another needed arrow in agency decision makers’ quiver to make better-informed, risk-based decisions.