Extending Existing Risk Management Frameworks to AI Systems Can Help Accelerate Safe Adoption of AI to Drive Performance Efficiencies in Public Sector and Critical Infrastructure Industries

The White House Executive Order (EO 14110) on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence directs agency Chief Information Officers, Chief Information Security Officers, and authorizing officials to operationalize generative AI and other critical and emerging technologies. Agencies must incorporate risk management tailored to AI systems. The NIST AI Risk Management Framework (NIST AI 100-1) helps organizations manage the many risks of AI and promotes the trustworthy and responsible development and use of AI systems. Given the stringent timelines for implementing strong governance and risk management protocols, agencies should consider augmenting and enhancing existing risk management models such as the NIST RMF and NIST SP 800-53 to define an implementable risk management profile for AI.

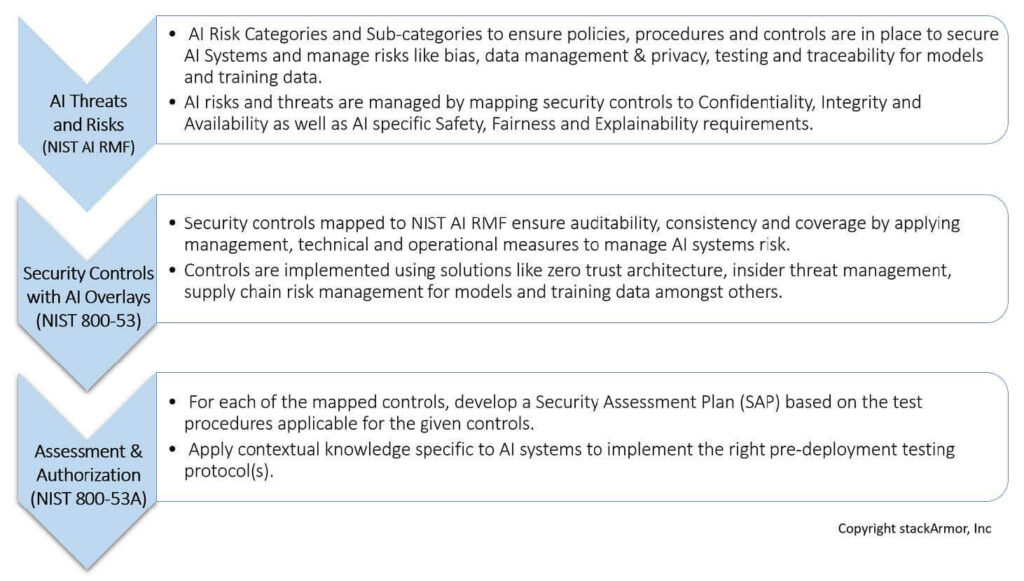

The NIST AI RMF (NIST AI 100-1) provides a starting point for organizations to understand and assess the risks associated with AI systems. Organizations must still find systematic and consistent ways to act on those risks and responsibly develop trustworthy AI systems. To support the deployment of trustworthy AI systems, the NIST AI RMF defines four functions: Govern, Map, Measure, and Manage. Each of these high-level functions is broken down into categories and subcategories, which are in turn subdivided into specific actions and outcomes. These actions do not constitute a checklist, nor are they necessarily an ordered set of steps. Organizations therefore need an actionable set of instructions, steps, and specific guidance on how best to implement risk management capabilities. This gap can be filled by mapping NIST AI RMF risk categories to NIST SP 800-53 controls and implementing a governance model that builds on existing cybersecurity risk practices and extends them to AI-specific risks such as bias, safety, and explainability. The approach starts with the NIST AI RMF risk categories, maps them to NIST SP 800-53 controls with AI overlays, and then executes the NIST RMF activities.
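To make the mapping idea more concrete, here is a minimal Python sketch of the hierarchy described above: AI RMF subcategories, grouped under the four functions, each linked to SP 800-53 Rev 5 controls. The subcategory-to-control pairings, the `CROSSWALK` table, and the helper functions are hypothetical illustrations only; they are not an official NIST crosswalk and not stackArmor's actual mapping.

```python
from collections import defaultdict

# Hypothetical crosswalk: NIST AI RMF subcategory -> (function, SP 800-53 Rev 5 controls).
# The pairings are illustrative only, not an official NIST or stackArmor mapping.
CROSSWALK = {
    "GOVERN 1.1": ("Govern", ["PM-9", "PL-2"]),
    "MAP 1.1": ("Map", ["RA-3", "PL-2"]),
    "MEASURE 2.11": ("Measure", ["RA-3", "SA-11"]),
    "MANAGE 4.1": ("Manage", ["CA-7", "SI-4"]),
}


def controls_by_function(crosswalk):
    """Roll subcategory mappings up to the AI RMF functions, de-duplicating controls."""
    rollup = defaultdict(set)
    for function, controls in crosswalk.values():
        rollup[function].update(controls)
    # Sort control IDs so the output is deterministic and easy to diff between assessments.
    return {fn: sorted(ctrls) for fn, ctrls in rollup.items()}


def control_baseline(crosswalk):
    """Union of all mapped controls: the candidate set to tailor with AI overlays."""
    return sorted({c for _, controls in crosswalk.values() for c in controls})


if __name__ == "__main__":
    for fn, ctrls in controls_by_function(CROSSWALK).items():
        print(fn, ctrls)
    print("Baseline:", control_baseline(CROSSWALK))
```

Keeping the crosswalk as plain data means the same table can drive both control selection and, later, the assessment plan, which mirrors the reuse of existing RMF machinery that the approach relies on.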

Extending and adapting existing cyber risk management programs with AI overlays to address safety, bias, and explainability can help public sector organizations start deploying AI systems faster – safely! stackArmor’s novel approach of mapping NIST AI RMF risk categories to the security, governance, and risk management controls codified in NIST SP 800-53 avoids the cost and delay of creating a new governance framework. By tailoring security control implementations specifically for managing AI system risk, organizations can reduce the time and cost of delivering secure and trustworthy AI. The ATO for AI™ governance model allows risk management and security professionals with experience in NIST RMF, FedRAMP, StateRAMP, and NIST CSF to take immediate measures to address AI risk, security, and governance issues. Mapping the NIST AI RMF to NIST SP 800-53 security and privacy controls unlocks highly repeatable risk management procedures that are familiar to security practitioners, and it provides verifiable, objective metrics that support measurable assessments. Additionally, once the applicable controls are identified, the associated testing and verification procedures can also be identified and applied, as shown in the infographic below.
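The idea of a verifiable objective metric can be sketched as follows: once controls are selected, each assessment objective receives a determination (SP 800-53A uses "satisfied" or "other than satisfied"), and the results roll up into a simple ratio plus a list of open items. The control IDs, objective wording, findings, and the `compliance_score` and `open_items` helpers below are hypothetical and exist only for illustration; they are not real assessment data.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    control_id: str  # SP 800-53 control, assumed to be tailored with an AI overlay
    objective: str   # what the assessor tested (hypothetical wording)
    satisfied: bool  # True = "satisfied", False = "other than satisfied"


# Hypothetical assessment results for an AI system; not real data.
FINDINGS = [
    Finding("RA-3", "AI bias risk included in the risk assessment", True),
    Finding("SA-11", "Model evaluated against a fairness test set", True),
    Finding("CA-7", "Model drift monitored after deployment", False),
    Finding("SI-4", "Anomalous model outputs generate alerts", True),
]


def compliance_score(findings):
    """Fraction of assessment objectives determined 'satisfied' (0.0 to 1.0)."""
    if not findings:
        return 0.0
    return sum(f.satisfied for f in findings) / len(findings)


def open_items(findings):
    """Controls with 'other than satisfied' determinations, e.g. candidate POA&M entries."""
    return [f.control_id for f in findings if not f.satisfied]


if __name__ == "__main__":
    print(f"Score: {compliance_score(FINDINGS):.2f}")
    print("Open items:", open_items(FINDINGS))
```

With the sample findings, three of four objectives are satisfied, so the score is 0.75 and CA-7 is the single open item. A real program would weight findings by risk rather than count them equally.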

“Harnessing the power of AI for delivery of government mission and services will be transformational. But it is complicated to align all the emerging policy, risk frameworks, approval processes, and existing policy and law. I am thrilled to be included in the COE because I have seen the work of the stackArmor team to drill down to details and find a path to connect all the pieces. We can only get to use of operational AI at scale by working through these details. I hope the output of the COE will deliver tools that agencies can use to move faster and to confidently scale AI capabilities.”

Suzette Kent, Former Federal CIO. Ms. Kent has an extensive private and public sector background. As the Federal CIO, Ms. Kent was responsible for government-wide IT policies and spending, and also chaired the Federal CIO Council and the Technology Modernization Fund Board.

“Managing risk associated with AI systems is essential to ensuring Government’s ability to improve agency effectiveness and efficiency using next generation AI and Automated Decision Support systems. stackArmor’s systems engineering approach to applying NIST security controls to AI systems provides a reasonable blueprint for AI risk management.”

Richard Spires, Former DHS and IRS CIO. Mr. Spires provides advice to companies and government agencies on strategy, digital transformation, operations, and business development. He previously served as the Chief Information Officer (CIO) of the U.S. Department of Homeland Security (DHS) and as CIO of the Internal Revenue Service (IRS).

“The adoption of risk-based methods for managing and governing AI systems that leverage security controls defined in NIST SP 800-53 Rev 5 as well as established governance programs like FedRAMP can help agencies adopt AI more rapidly. Reducing the time and cost burden on agencies and supporting contractors by enhancing existing protocols is critical to ensuring timely deployment of AI systems for supporting the government mission.”

Maria Roat, Former Deputy Federal CIO, SBA CIO, and Director, FedRAMP PMO. Ms. Roat is a Senior Information Technology and Cybersecurity Executive with 3+ decades’ experience driving enterprise-scale digital transformation within the Federal Government. She is recognized as a builder, collaborator, and solutions innovator with the vision, audacity, and drive to lead complex multibillion-dollar technology initiatives.

“ATO for AI offers government agencies a fiscally prudent pathway to safe and secure AI adoption that builds upon lessons learned from implementing existing governance frameworks like FISMA and FedRAMP. stackArmor’s approach of operationalizing NIST AI RMF with actionable control implementations can help agencies accelerate safe AI systems adoption without having to retrain thousands of program, acquisition, and IT specialists on new governance models for AI.”

Alan Thomas, Former Commissioner, Federal Acquisition Service, GSA. Mr. Thomas is an operating executive and former Federal political appointee with more than 25 years of experience delivering mission-critical programs, championing large-scale digital transformation initiatives, and building deep functional expertise in acquisition and procurement. He is currently the Chief Solutions Officer at Leadership Connect.

stackArmor provides FedRAMP, FISMA/RMF, and CMMC/DFARS compliance acceleration services on Amazon Web Services (AWS). stackArmor’s ThreatAlert® Security Platform reduces the time and cost of an ATO by 40%. We serve enterprise customers in the Defense, Aerospace, Space, Government, and Healthcare markets, as well as ISVs looking to offer cloud solutions for Government.

© stackArmor. All Rights Reserved 2024.